经典的WordCount程序源码如下:

1 | package cn.edu.ecnu.spark.example.java.wordcount; |

1 新建maven项目

- 在

idea新建项目:

pom.xml内容如下:

1 |

|

- 更新依赖

2 新建 Java 代码

- 新建包

cn.edu.ecnu.spark.example.java.wordcount,类WordCount:

3 打包

- 打包为

.jar:

4 传送到客户端

- 将打包好的

.jar(位置项目路径\out\artifacts\spark_wordcount_jar)传到客户端的/home/dase-dis/spark-2.4.7/myapp

5 下载测试数据

下载:

wget https://github.com/ymcui/Chinese-Cloze-RC/archive/master.zip解压:

unzip master.zip解压:

unzip ~/Chinese-Cloze-RC-master/people_daily/pd.zip拷贝到集群:

~/hadoop-2.10.1/bin/hdfs dfs -put ~/Chinese-Cloze-RC-master/people_daily/pd/pd.test spark_input/pd.test

6 提交jar任务

删除输出文件夹:

~/hadoop-2.10.1/bin/hdfs dfs -rm -r spark_output提交任务:

~/spark-2.4.7/bin/spark-submit \ --master spark://ecnu01:7077 \ --class cn.edu.ecnu.spark.example.java.wordcount.WordCount \ /home/dase-dis/spark-2.4.7/myapp/spark-wordcount.jar hdfs://ecnu01:9000/user/dase-dis/spark_input hdfs://ecnu01:9000/user/dase-dis/spark_output

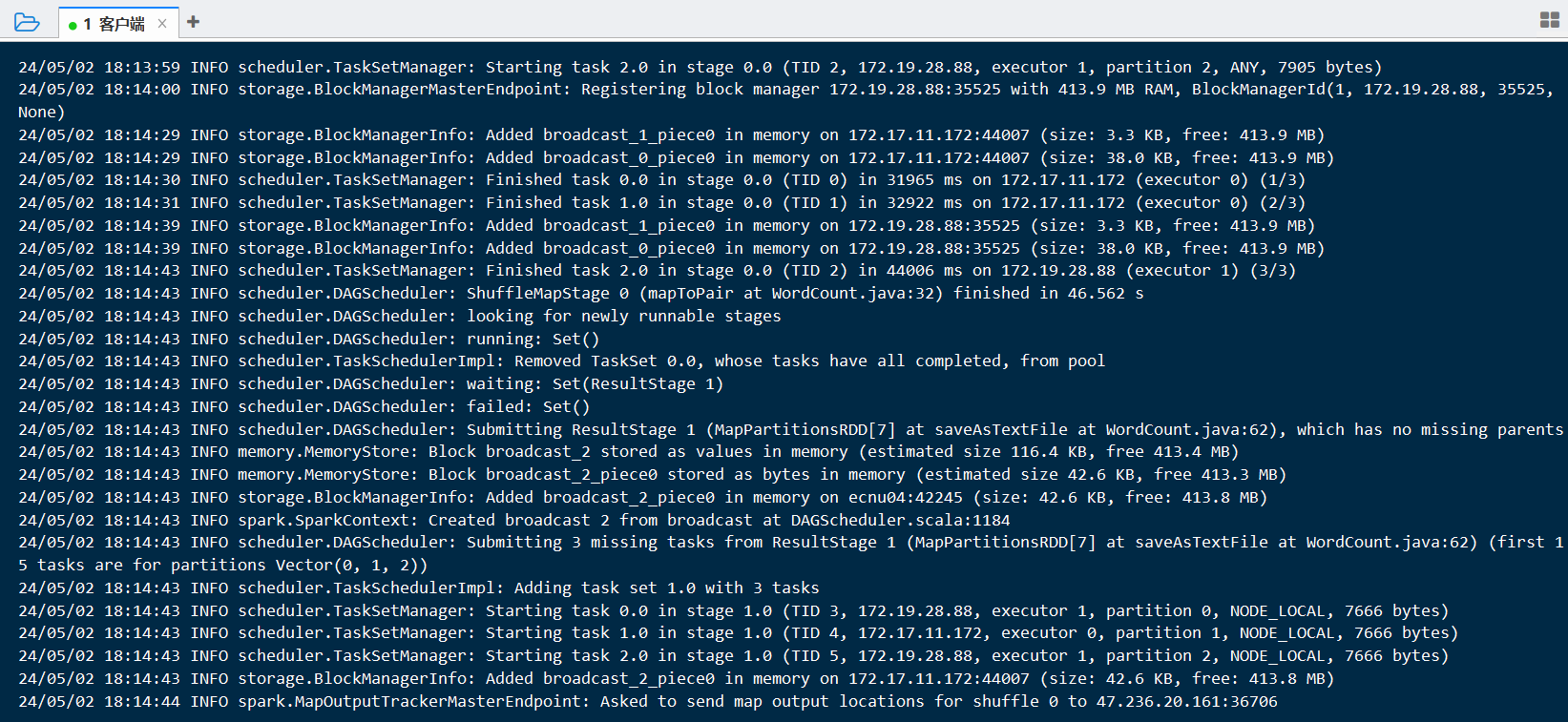

正在运行

顺利跑完

ssh:

webui:

查看output文件夹:

查看part01运行结果:

./hadoop-2.10.1/bin/hdfs dfs -cat /user/dase-dis/spark_output/part-00001